A Smarter Way to Navigate: Revolutionary Camera-Only Obstacle Avoidance for Autonomous Vehicles

As the race towards fully autonomous vehicles accelerates, ensuring safe navigation amidst unpredictable obstacles remains a paramount challenge. Researchers from Ho Chi Minh City University of Technology and Education alongside Chungbuk National University have unveiled an innovative solution in their recent study, which proposes a streamlined obstacle avoidance system that uses only a camera for perception.

Transforming Perception with YOLOv11

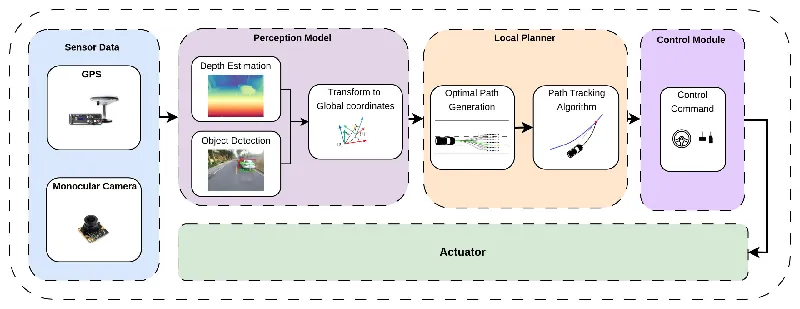

The research presents an efficient obstacle avoidance pipeline built on YOLOv11, a model from the You Only Look Once (YOLO) family, for real-time object detection. Unlike traditional methods that rely heavily on costly 3D LiDAR systems, this approach handles perception with a single monocular camera. The shift reduces cost and pares down the hardware requirements, making autonomous technology more accessible.

In their experiments, the researchers compared several YOLO models and found that YOLOv11 provided the best accuracy without compromising on speed. The model ran at 84 frames per second (FPS), or roughly 12 milliseconds per frame, which matters in real-time applications where every millisecond counts in hazardous situations.
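A throughput figure like this is typically obtained by timing repeated inference calls over a batch of frames. The sketch below shows one hedged way to do that in Python. The names `measure_fps` and `frame_budget_ms`, and the stand-in `infer` callable, are hypothetical and are not the researchers' benchmarking code.

```python
import time

def frame_budget_ms(fps):
    """Time available per frame, in milliseconds, at a given frame rate."""
    return 1000.0 / fps

def measure_fps(infer, frames, warmup=3):
    """Average frames per second of `infer` over `frames`.

    `infer` is any callable that processes one frame (an assumption here:
    in practice it would wrap the detector's forward pass).
    """
    # Warm-up runs are excluded so one-off start-up costs do not skew the result.
    for frame in frames[:warmup]:
        infer(frame)

    start = time.perf_counter()
    for frame in frames:
        infer(frame)
    elapsed = time.perf_counter() - start

    # Guard against a zero reading on very coarse timers.
    return len(frames) / elapsed if elapsed > 0 else float("inf")

# Usage with a dummy workload standing in for a real detector.
dummy_frames = [list(range(1000))] * 20
fps = measure_fps(sum, dummy_frames)
print(f"measured: {fps:.0f} FPS, budget at 84 FPS: {frame_budget_ms(84):.1f} ms")
```

At 84 FPS the per-frame budget works out to about 11.9 ms, which every later stage of the pipeline has to share if the system is to keep pace with the camera.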

Depth Estimation: Seeing Beyond the Surface

A major component of this system is depth estimation, which lets the vehicle gauge distances to obstacles. The researchers employed Depth Anything V2, a state-of-the-art monocular depth estimation model that predicts a per-pixel depth map from a single 2D image. By continuously estimating distances, the vehicle can better understand its surroundings and react promptly, enhancing overall safety.

In the researchers' tests, Depth Anything V2 kept errors low across the distance ranges evaluated, supporting its role as the distance estimator in the vehicle's navigation stack.
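To make the link between detection and depth concrete, here is a minimal sketch of how a detector's bounding box and a depth map could be combined into a single distance per obstacle. It assumes the depth map is a NumPy array already expressed in metres and that boxes are given as pixel corners `(x1, y1, x2, y2)`. The function name `obstacle_distance` and the choice of the median are illustrative assumptions, not details taken from the study.

```python
import numpy as np

def obstacle_distance(depth_map, box):
    """Median depth inside a bounding box, or None if no valid pixels exist.

    depth_map: 2D array of per-pixel depth (assumed to be in metres).
    box: (x1, y1, x2, y2) pixel corners, with x2 and y2 exclusive.
    """
    height, width = depth_map.shape
    x1, y1, x2, y2 = box

    # Clamp the box to the image so partially visible obstacles still work.
    x1, x2 = max(0, x1), min(width, x2)
    y1, y2 = max(0, y1), min(height, y2)
    if x2 <= x1 or y2 <= y1:
        return None

    patch = depth_map[y1:y2, x1:x2]
    # Drop invalid readings (NaN, inf, non-positive) before aggregating.
    valid = patch[np.isfinite(patch) & (patch > 0)]
    if valid.size == 0:
        return None

    # The median is less sensitive than the mean to background pixels
    # that fall inside the box but do not belong to the obstacle.
    return float(np.median(valid))

# Usage: a 10 m background with a 3 m obstacle in the middle of the frame.
depth = np.full((4, 6), 10.0)
depth[1:3, 2:4] = 3.0
print(obstacle_distance(depth, (2, 1, 4, 3)))
```

A planner could then treat any obstacle whose estimated distance falls below a chosen safety threshold as something to steer around.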

Optimal Path Planning for Smooth Navigation

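Beyond perception, the proposed system integrates motion planning, specifically a combined Frenet and Pure Pursuit approach, to optimize the vehicle's path in real time. The Frenet frame describes the vehicle's position as progress along a reference path plus a lateral offset from it, which makes sidestepping an obstacle easy to express, while Pure Pursuit is a path-tracking method that steers toward a look-ahead point on the planned path. Together they let the vehicle adjust its trajectory smoothly to avoid obstacles while maintaining comfort and safety for passengers.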
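The two ideas can be illustrated with a short sketch. It is a simplified example under stated assumptions, not the researchers' implementation: the reference path is a straight line along the x-axis (so Frenet coordinates `(s, d)` map directly to `(x, y)`), the lateral shift follows a standard quintic minimum-jerk profile, and steering uses the textbook Pure Pursuit law for a bicycle model. The function names and the numeric values are hypothetical.

```python
import math

def lateral_offset(s, s_end, d_start, d_end):
    """Lateral offset d(s) that moves smoothly from d_start to d_end.

    Uses the quintic blend 10t^3 - 15t^4 + 6t^5, whose first and second
    derivatives are zero at both ends, so the manoeuvre starts and ends
    without a sudden change in heading or curvature.
    """
    t = min(max(s / s_end, 0.0), 1.0)  # normalised progress, clamped to [0, 1]
    blend = 10 * t**3 - 15 * t**4 + 6 * t**5
    return d_start + (d_end - d_start) * blend

def pure_pursuit_steering(pose, target, wheelbase):
    """Steering angle (radians) that arcs the vehicle toward a target point.

    pose: (x, y, yaw) of the rear axle, yaw in radians.
    target: (x, y) look-ahead point on the planned path.
    Textbook law: delta = atan(2 * L * sin(alpha) / Ld).
    """
    x, y, yaw = pose
    dx, dy = target[0] - x, target[1] - y
    lookahead = math.hypot(dx, dy)
    if lookahead == 0.0:
        return 0.0  # already at the target, no steering needed
    alpha = math.atan2(dy, dx) - yaw  # bearing to target relative to heading
    return math.atan2(2.0 * wheelbase * math.sin(alpha), lookahead)

# Usage: shift 1.5 m to the left over 20 m, then steer toward the path
# point 5 m ahead. Wheelbase and distances are illustrative values.
path = [(float(s), lateral_offset(s, 20.0, 0.0, 1.5)) for s in range(21)]
steer = pure_pursuit_steering((0.0, 0.0, 0.0), path[5], wheelbase=2.5)
print(f"steering angle: {math.degrees(steer):.2f} deg")
```

A full Frenet planner would typically generate many candidate offsets and pick the one with the lowest cost that stays clear of obstacles. The sketch shows only a single candidate and the tracking step that follows it.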

Using simulations conducted on a university campus, the team demonstrated the system's efficacy in handling diverse scenarios, from maneuvering around a single obstacle to negotiating multiple obstacles in tight spaces. The way the vehicle adapted its steering angle in each case showcased its agility and reliability.

Real-World Testing Brings Promising Results

To validate their approach, the team conducted rigorous experiments in controlled environments, simulating everyday driving conditions. Their findings revealed that the integrated system not only performed well in recognizing and navigating around obstacles but also delivered consistently smooth trajectory adjustments.

By merging cutting-edge computer vision with pragmatic engineering, this research sets a new standard for obstacle avoidance in autonomous vehicles, promising safer roads and smarter navigation techniques.

The Road Ahead: Innovations on the Horizon

While the current implementation demonstrates significant advancements, the researchers acknowledge limitations and express a desire to enhance depth estimation accuracy further. Future iterations may expand the camera system's breadth, incorporating additional cameras to cover blind spots, thus elevating safety standards even more.

This breakthrough demonstrates that innovative research can redefine how autonomous vehicles perceive and interact with their environments, pushing the boundaries of technology toward a future where self-driving cars can navigate safely and efficiently in real-world scenarios.