Cracking the Code: How K-Fold Cross-Validation Affects Lasso Regression Consistency

In a new paper, Mayukh Choudhury and Debraj Das of the Indian Institute of Technology Bombay study Lasso regression, a widely used statistical method for regularization and variable selection. They examine what happens when the Lasso penalty parameter is chosen by K-fold cross-validation (CV), as it usually is in practice, and show that this choice has concrete consequences for Lasso's variable selection consistency.

The Importance of Lasso in Statistical Modeling

The Least Absolute Shrinkage and Selection Operator, known as Lasso, is popular because it can improve both predictive accuracy and interpretability: its absolute-value (L1) penalty shrinks coefficients toward zero and sets some of them exactly to zero, which performs variable selection. How well Lasso works depends heavily on the penalty parameter, which controls how much shrinkage is applied and therefore how many coefficients end up exactly zero.
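To illustrate how the penalty parameter controls shrinkage and selection, here is a minimal pure-Python sketch that fits Lasso by cyclic coordinate descent with soft-thresholding. This is one standard algorithm, not necessarily the one used in the paper. It assumes the common scaling (1/(2n)) times the residual sum of squares plus `lam` times the L1 norm, a model without intercept, and a hypothetical simulated dataset; the names `soft_threshold` and `lasso_cd` are our own.

```python
import random


def soft_threshold(a, lam):
    """Soft-thresholding operator: sign(a) * max(|a| - lam, 0)."""
    if a > lam:
        return a - lam
    if a < -lam:
        return a + lam
    return 0.0


def lasso_cd(X, y, lam, max_sweeps=500, tol=1e-8):
    """Minimize (1/(2n)) * sum_i (y_i - x_i . beta)^2 + lam * sum_j |beta_j|
    by cyclic coordinate descent. X is a list of n rows of p floats; no intercept."""
    n, p = len(X), len(X[0])
    beta = [0.0] * p
    col_sq = [sum(row[j] ** 2 for row in X) / n for j in range(p)]  # (1/n) * ||X_j||^2
    resid = list(y)  # current residuals y - X beta (beta starts at zero)
    for _ in range(max_sweeps):
        max_change = 0.0
        for j in range(p):
            # Correlation of column j with the partial residual that excludes coordinate j.
            rho = sum(X[i][j] * resid[i] for i in range(n)) / n + col_sq[j] * beta[j]
            new_bj = soft_threshold(rho, lam) / col_sq[j]
            delta = new_bj - beta[j]
            if delta != 0.0:
                for i in range(n):
                    resid[i] -= X[i][j] * delta
                beta[j] = new_bj
            max_change = max(max_change, abs(delta))
        if max_change < tol:
            break
    return beta


# Hypothetical simulated data: two active and two inactive predictors.
rng = random.Random(0)
n = 200
true_beta = [2.0, -1.0, 0.0, 0.0]
X = [[rng.gauss(0, 1) for _ in range(4)] for _ in range(n)]
y = [sum(b * x for b, x in zip(true_beta, row)) + rng.gauss(0, 1) for row in X]

# Larger penalties tend to shrink more and set more coefficients exactly to zero.
for lam in (0.0, 0.1, 0.5, 1.5):
    beta = lasso_cd(X, y, lam)
    nonzero = sum(b != 0.0 for b in beta)
    print(f"lam={lam}: beta={[round(b, 2) for b in beta]}, nonzero={nonzero}")
```

Coordinate descent suits Lasso because each one-dimensional subproblem has the closed-form soft-thresholding solution; scikit-learn's `Lasso` also uses coordinate descent, in compiled code.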

Estimation Consistency vs. Selection Consistency

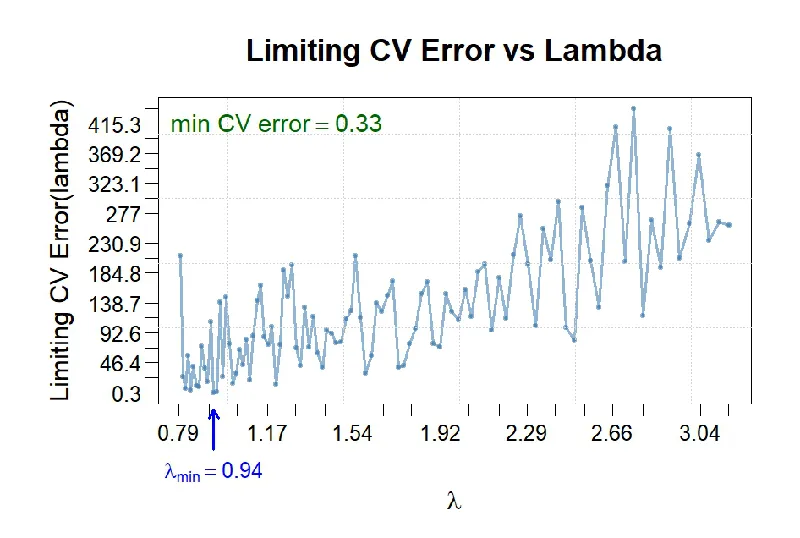

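Choudhury and Das's central finding is that the way the penalty parameter is selected determines which kind of consistency Lasso achieves. Existing theory ties Lasso's large-sample behavior to how fast the penalty grows with the sample size n: some growth rates give variable selection consistency, while a penalty of order √n gives √n-consistent estimation. In practice, though, the penalty is chosen from the data, most often by K-fold CV, so it is not obvious which regime applies. One might expect CV-tuned Lasso to remain variable selection consistent; the paper shows that it does not.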
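For a fixed number of predictors p, the standard asymptotic picture can be written as follows. The notation is our own, not necessarily the paper's, and the statements are loose, with regularity conditions omitted; S is the set of truly nonzero coefficients and Ŝ_n the set selected by Lasso.

```latex
\hat{\beta}_n = \arg\min_{\beta \in \mathbb{R}^p}
  \sum_{i=1}^{n} \left( y_i - x_i^\top \beta \right)^2 + \lambda_n \sum_{j=1}^{p} |\beta_j|,
\qquad
S = \{ j : \beta_j \neq 0 \}, \quad \hat{S}_n = \{ j : \hat{\beta}_{n,j} \neq 0 \}.

\text{(a) } \frac{\lambda_n}{\sqrt{n}} \to \lambda_0 \in [0, \infty):
\quad \sqrt{n}\,(\hat{\beta}_n - \beta) = O_P(1),
\quad \text{but } P(\hat{S}_n = S) \not\to 1 \text{ when some } \beta_j = 0.

\text{(b) } \frac{\lambda_n}{n} \to 0,\ \frac{\lambda_n}{\sqrt{n}} \to \infty:
\quad P(\hat{S}_n = S) \to 1 \text{ (under an irrepresentable-type condition)},
\quad \text{but } \sqrt{n}\,(\hat{\beta}_n - \beta) \text{ is not } O_P(1).
```

Read this way, the paper's conclusion is that Lasso tuned by K-fold CV behaves like regime (a): √n-consistent, but not selection consistent.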

Working with a fixed-dimensional heteroscedastic linear regression model and a fixed number of folds K, the authors prove that Lasso with a K-fold CV penalty is √n-consistent: its estimation error is of order 1/√n, so √n times the error stays bounded in probability. It is not, however, variable selection consistent: the probability that it selects exactly the truly nonzero coefficients does not tend to one as n grows. Practitioners who treat the variables retained by CV-tuned Lasso as the true model should keep this in mind.
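The following sketch shows how K-fold CV picks the penalty: each candidate value is scored by its held-out squared prediction error, and the minimizer is refit on the full data. The heteroscedastic design (noise standard deviation 0.5 + |x_1|), the candidate grid, K = 5, and all function names are hypothetical choices for illustration. A compact coordinate-descent solver with the (1/(2n)) scaling is included so the block runs on its own; in that scaling, `lam` corresponds to λ_n / (2n) for the unscaled sum-of-squares objective.

```python
import random


def soft_threshold(a, lam):
    """Soft-thresholding operator: sign(a) * max(|a| - lam, 0)."""
    if a > lam:
        return a - lam
    if a < -lam:
        return a + lam
    return 0.0


def lasso_cd(X, y, lam, max_sweeps=500, tol=1e-8):
    """Coordinate descent for (1/(2n)) * RSS + lam * L1 norm; no intercept."""
    n, p = len(X), len(X[0])
    beta = [0.0] * p
    col_sq = [sum(row[j] ** 2 for row in X) / n for j in range(p)]
    resid = list(y)  # residuals y - X beta
    for _ in range(max_sweeps):
        max_change = 0.0
        for j in range(p):
            rho = sum(X[i][j] * resid[i] for i in range(n)) / n + col_sq[j] * beta[j]
            new_bj = soft_threshold(rho, lam) / col_sq[j]
            delta = new_bj - beta[j]
            if delta != 0.0:
                for i in range(n):
                    resid[i] -= X[i][j] * delta
                beta[j] = new_bj
            max_change = max(max_change, abs(delta))
        if max_change < tol:
            break
    return beta


def kfold_cv_lambda(X, y, lambdas, K=5, seed=0):
    """Return the candidate penalty with the smallest K-fold CV squared prediction error."""
    n = len(X)
    idx = list(range(n))
    random.Random(seed).shuffle(idx)  # random assignment of observations to folds
    folds = [idx[k::K] for k in range(K)]
    best_lam, best_err = None, float("inf")
    for lam in lambdas:
        err = 0.0
        for k in range(K):
            held_out = set(folds[k])
            train = [i for i in range(n) if i not in held_out]
            beta = lasso_cd([X[i] for i in train], [y[i] for i in train], lam)
            for i in folds[k]:
                pred = sum(b * x for b, x in zip(beta, X[i]))
                err += (y[i] - pred) ** 2
        if err < best_err:
            best_lam, best_err = lam, err
    return best_lam


# Hypothetical heteroscedastic data: noise s.d. grows with |x_1|.
rng = random.Random(1)
n = 200
true_beta = [2.0, -1.0, 0.0, 0.0]
X = [[rng.gauss(0, 1) for _ in range(4)] for _ in range(n)]
y = [sum(b * x for b, x in zip(true_beta, row)) + (0.5 + abs(row[0])) * rng.gauss(0, 1)
     for row in X]

lambdas = [0.001, 0.01, 0.05, 0.1, 0.2, 0.5, 1.0]
lam_cv = kfold_cv_lambda(X, y, lambdas, K=5)
beta_cv = lasso_cd(X, y, lam_cv)  # refit on all data with the CV-chosen penalty
support = {j for j, b in enumerate(beta_cv) if b != 0.0}
print("CV lambda:", lam_cv, "selected support:", sorted(support))
```

A single run only shows which variables one CV-tuned fit keeps. To see the paper's prediction in finite samples, one would repeat the simulation many times and record how often the selected support equals exactly {0, 1}; the theory says this frequency does not approach one as n grows.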

Bootstrap Inference for CV-Tuned Lasso

The bootstrap does not replace K-fold CV; it builds on it. Because the CV-tuned Lasso estimator is √n-consistent, the authors can establish that the bootstrap validly approximates its distribution. This justifies inference, such as confidence intervals, based on √n-scaled pivotal quantities when the penalty is chosen by K-fold CV. The authors also check the bootstrap approximation in finite samples through a simulation study.
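A minimal sketch of bootstrap inference for a Lasso fit, assuming a paired bootstrap that resamples (x_i, y_i) rows (a common choice under heteroscedasticity) and holds the penalty fixed. This is not necessarily the scheme analyzed by Choudhury and Das, and the sketch makes no claim about the coverage of its intervals, especially for zero coefficients. The value `lam = 0.05` is a hypothetical stand-in for a K-fold CV choice; a fuller version might re-run CV inside each resample. The intervals are "basic" bootstrap intervals built from the bootstrap distribution of √n(β* − β̂), and all function names are our own.

```python
import random


def soft_threshold(a, lam):
    """Soft-thresholding operator: sign(a) * max(|a| - lam, 0)."""
    if a > lam:
        return a - lam
    if a < -lam:
        return a + lam
    return 0.0


def lasso_cd(X, y, lam, max_sweeps=500, tol=1e-8):
    """Coordinate descent for (1/(2n)) * RSS + lam * L1 norm; no intercept."""
    n, p = len(X), len(X[0])
    beta = [0.0] * p
    col_sq = [sum(row[j] ** 2 for row in X) / n for j in range(p)]
    resid = list(y)  # residuals y - X beta
    for _ in range(max_sweeps):
        max_change = 0.0
        for j in range(p):
            rho = sum(X[i][j] * resid[i] for i in range(n)) / n + col_sq[j] * beta[j]
            new_bj = soft_threshold(rho, lam) / col_sq[j]
            delta = new_bj - beta[j]
            if delta != 0.0:
                for i in range(n):
                    resid[i] -= X[i][j] * delta
                beta[j] = new_bj
            max_change = max(max_change, abs(delta))
        if max_change < tol:
            break
    return beta


def paired_bootstrap(X, y, lam, B=100, seed=0):
    """Draws of sqrt(n) * (beta_star - beta_hat), resampling rows with replacement."""
    n = len(X)
    beta_hat = lasso_cd(X, y, lam)
    rng = random.Random(seed)
    draws = []
    for _ in range(B):
        ids = [rng.randrange(n) for _ in range(n)]
        beta_star = lasso_cd([X[i] for i in ids], [y[i] for i in ids], lam)
        draws.append([n ** 0.5 * (bs - bh) for bs, bh in zip(beta_star, beta_hat)])
    return beta_hat, draws


def basic_intervals(beta_hat, draws, n, alpha=0.1):
    """Basic intervals: (beta_hat_j - q_hi / sqrt(n), beta_hat_j - q_lo / sqrt(n))."""
    B = len(draws)
    out = []
    for j, bh in enumerate(beta_hat):
        t = sorted(d[j] for d in draws)
        q_lo = t[int((alpha / 2) * (B - 1))]
        q_hi = t[int((1 - alpha / 2) * (B - 1))]
        out.append((bh - q_hi / n ** 0.5, bh - q_lo / n ** 0.5))
    return out


# Hypothetical heteroscedastic data; lam = 0.05 stands in for a K-fold CV choice.
rng = random.Random(2)
n = 150
true_beta = [2.0, -1.0, 0.0, 0.0]
X = [[rng.gauss(0, 1) for _ in range(4)] for _ in range(n)]
y = [sum(b * x for b, x in zip(true_beta, row)) + (0.5 + abs(row[0])) * rng.gauss(0, 1)
     for row in X]

beta_hat, draws = paired_bootstrap(X, y, lam=0.05, B=100, seed=3)
for j, (lo, hi) in enumerate(basic_intervals(beta_hat, draws, n, alpha=0.1)):
    print(f"beta_{j}: estimate {beta_hat[j]:.3f}, 90% basic interval ({lo:.3f}, {hi:.3f})")
```

Pairs are resampled rather than residuals so that each bootstrap sample keeps the link between x_i and the size of its noise, which matters in a heteroscedastic model.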

Conclusion: Rethinking Lasso Implementation

The study shows how strongly Lasso's properties depend on the way its penalty parameter is chosen, and it clarifies what the most common tuning method, K-fold CV, does and does not deliver: √n-consistent estimation that supports bootstrap inference, but not consistent variable selection. Researchers and practitioners who rely on CV-tuned Lasso to decide which variables matter should reconsider that step. The results concern models with a fixed number of predictors rather than high-dimensional settings.

Overall, Choudhury and Das add depth to the theory of Lasso and offer a practical message: K-fold CV is a sound way to tune Lasso for estimation and bootstrap-based inference, but the set of variables it selects should not be taken as a consistent estimate of the true model.