Cracking the Code of Comedy: How Large Language Models Fail to Understand Humor

In the vast landscape of artificial intelligence, humor has remained a particularly challenging frontier. A recent study titled "Comparing Apples to Oranges: A Dataset & Analysis of LLM Humour Understanding" dives deep into the capacities of large language models (LLMs) to comprehend and explain various forms of humor. Researchers Tyler Loakman, William Thorne, and Chenghua Lin from the Universities of Sheffield and Manchester put LLMs to the test in an effort to uncover whether these complex machines can understand not just traditional puns, but also topical jokes that reflect our ever-evolving cultural landscape.

From Puns to Pop Culture: The Dataset

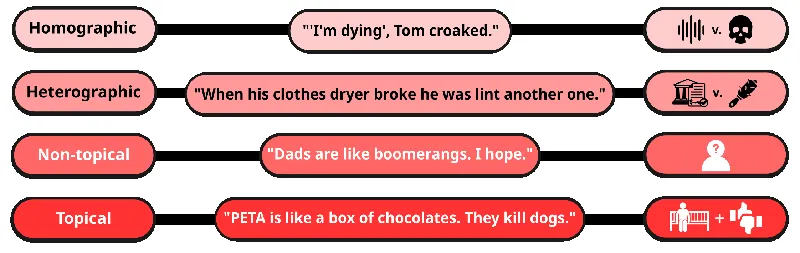

The researchers curated a dataset of 600 jokes categorized into four distinct types: homographic puns, heterographic puns, internet humor, and topical jokes. These categories span the spectrum of humor complexity, from straightforward wordplay to nuanced commentary on contemporary events. For instance, while a traditional pun might rely on a simple play on words ("What do you call a fake noodle? An impasta!"), topical jokes often require a deeper understanding of current affairs and cultural references.

LLMs Under Scrutiny

Using their dataset, the researchers assessed several LLMs, including models from the GPT-4 and Llama 3 families, on their ability to explain jokes across all categories. The findings revealed that none of the LLMs could consistently generate satisfactory explanations for jokes that required even moderate levels of contextual knowledge, highlighting a significant gap in current AI capabilities.

The Reality Check: Can AI Really Understand Humor?

The study's results suggest that LLMs are much better at deciphering simple puns than at unpacking more complex humor. Explanations of traditional puns and non-topical jokes succeeded far more often, while topical jokes posed a formidable challenge. Topical jokes require models to retrieve and apply knowledge of external cultural context, which today's LLMs struggle to do.
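To make the comparison concrete, a per-category success rate could be computed along the following lines. This is a minimal sketch, not the study's evaluation code: the function name, the category labels written as strings, and the toy judgments are all assumptions made up for illustration.

```python
from collections import defaultdict

# The four joke types named in the article (string labels are an assumption).
CATEGORIES = (
    "homographic pun",
    "heterographic pun",
    "internet humor",
    "topical joke",
)


def success_rate_by_category(judgments):
    """Return the fraction of explanations marked successful per category.

    `judgments` is an iterable of (category, is_success) pairs, where
    `is_success` records whether an explanation was deemed satisfactory.
    """
    totals = defaultdict(int)
    successes = defaultdict(int)
    for category, is_success in judgments:
        if category not in CATEGORIES:
            raise ValueError(f"unknown category: {category}")
        totals[category] += 1
        successes[category] += int(is_success)
    # Only categories that actually appear in the input are reported.
    return {category: successes[category] / totals[category] for category in totals}


# Made-up judgments purely for illustration; these are NOT results from the study.
toy_judgments = [
    ("heterographic pun", True),
    ("heterographic pun", True),
    ("topical joke", True),
    ("topical joke", False),
]
rates = success_rate_by_category(toy_judgments)
```

Comparing the resulting rates across categories is one simple way to express the gap the article describes between puns and topical jokes.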

What's Next for AI and Humor?

The implications of this research extend beyond the realm of humor itself. Understanding humor is integral to grasping the nuances of human interaction and communication. Models that fail to engage with humor critically are also likely to falter in recognizing sarcasm, irony, and other forms of sophisticated human expression. The researchers advocate for a broader focus in AI humor studies, one that encompasses a range of comedic styles and complexities rather than narrowing in on simple puns.

In conclusion, while LLMs showcase impressive feats of language processing, their inability to navigate the nuances of humor points to a significant area for improvement. As AI continues to evolve, understanding humor may well prove to be a key benchmark of its progress toward human-like comprehension.