Decoding Radiologists' Eye Movements: A New Frontier in Medical Imaging Analysis

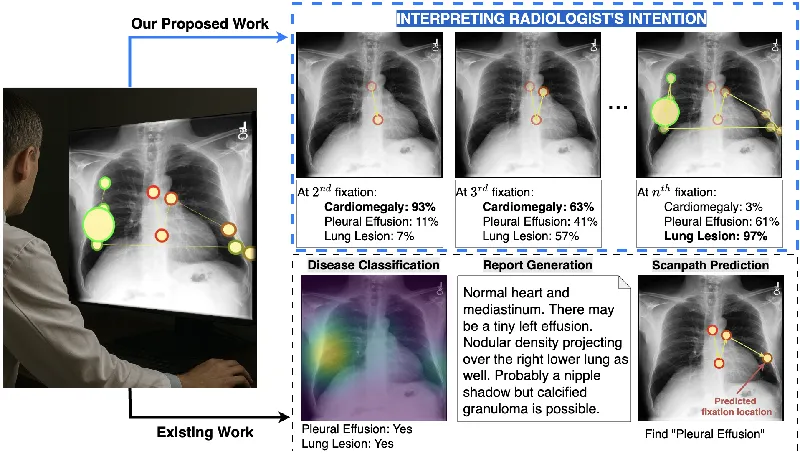

As artificial intelligence takes on a larger role in healthcare, a study from researchers at the University of Arkansas, the University of Liverpool, and the University of Houston proposes a new approach to understanding how radiologists interpret chest X-rays. The research addresses a gap in existing AI models by focusing on the cognitive intention behind each gaze during diagnostic reading.

The Importance of Eye Movements in Diagnosis

Radiologists rely heavily on their eye movements to navigate complex medical images, searching for signs of disease while working through a mental checklist. The researchers argue that current AI systems mostly mimic these visual search patterns without interpreting the intention behind each fixation. That leaves a central question unanswered: what is a radiologist actually looking for at any given moment during the analysis?

Introducing RadGazeIntent

To bridge this gap, the authors introduced RadGazeIntent, a deep learning framework that processes radiologists' eye-tracking data and predicts their intentions as the reading unfolds. Unlike previous models that only classify diseases or generate reports from visual cues, RadGazeIntent models the radiologist's search process itself. It is designed to handle three types of search strategy:

- Systematic Sequential Search (RadSeq): Following a checklist of findings.

- Uncertainty-Driven Exploration (RadExplore): Responding opportunistically to visual cues.

- Hybrid Patterns (RadHybrid): Combining broad initial scans with focused searches.

This distinction matters because it supports more interactive AI systems that can work in tandem with human expertise.

Evaluation and Findings

In the authors' evaluation, RadGazeIntent outperformed the baseline methods on all of the intention-labeled datasets. The results show that it can identify the specific finding a radiologist is examining and attach a confidence score to each prediction. The model recovers the purpose behind gaze patterns, whether the radiologist is following a systematic checklist or searching in an exploratory way.
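To make the idea of a per-fixation prediction with a confidence score concrete, here is a minimal Python sketch. It is not the authors' implementation: the `Fixation` fields, the finding names, and the raw scores are all hypothetical, and a plain softmax stands in for whatever scoring the real model uses.

```python
import math
from dataclasses import dataclass

# Hypothetical finding labels, chosen only for illustration.
FINDINGS = ["cardiomegaly", "pleural effusion", "atelectasis"]


@dataclass
class Fixation:
    x: float            # normalised horizontal position in [0, 1] (assumed)
    y: float            # normalised vertical position in [0, 1] (assumed)
    duration_ms: float  # how long the gaze rested at this point


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def predict_intention(scores):
    """Return (finding, confidence) for one fixation's raw scores."""
    probs = softmax(scores)
    best = max(range(len(probs)), key=probs.__getitem__)
    return FINDINGS[best], probs[best]


# Made-up scores standing in for a trained model's per-fixation output.
scanpath = [
    (Fixation(0.48, 0.62, 310.0), [2.0, 0.5, 0.1]),
    (Fixation(0.21, 0.80, 540.0), [0.2, 1.9, 0.4]),
]

predictions = [predict_intention(scores) for _, scores in scanpath]
for (fix, _), (finding, conf) in zip(scanpath, predictions):
    print(f"({fix.x:.2f}, {fix.y:.2f}) -> {finding} ({conf:.2f})")
```

Each fixation ends up paired with the finding it most likely targets and a probability-like confidence, which is the kind of output an interactive system could display alongside the image.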

The Broader Implications

Understanding the intention behind radiologists' eye movements does more than improve diagnostic accuracy. It opens the door to interactive systems that adapt in real time to what a human expert is focusing on. This could lead to better collaborative tools in radiology, surgical navigation, and medical education, benefiting both practitioners and learners.

This research not only sheds light on the cognitive processes underlying visual search behavior in radiology but also lays the groundwork for future innovations that can enhance the synergy between AI technology and human expertise in healthcare.