Revolutionizing 3D Point Tracking: Discover How SpatialTrackerV2 Sets New Standards

Researchers from institutions including Zhejiang University and the University of Oxford have introduced SpatialTrackerV2, a feed-forward method for 3D point tracking from monocular videos. The authors report that it outperforms existing 3D tracking methods by 30% and matches the accuracy of leading dynamic 3D reconstruction approaches while running 50 times faster.

The Challenge of 3D Point Tracking

3D point tracking recovers the long-term trajectories of points in three-dimensional space, typically from video footage. Its applications include robotics, video generation, and 3D reconstruction. Conventional methods often relied on complex, multi-step pipelines that were computationally expensive, particularly when processing everyday videos.

A Unified Approach with SpatialTrackerV2

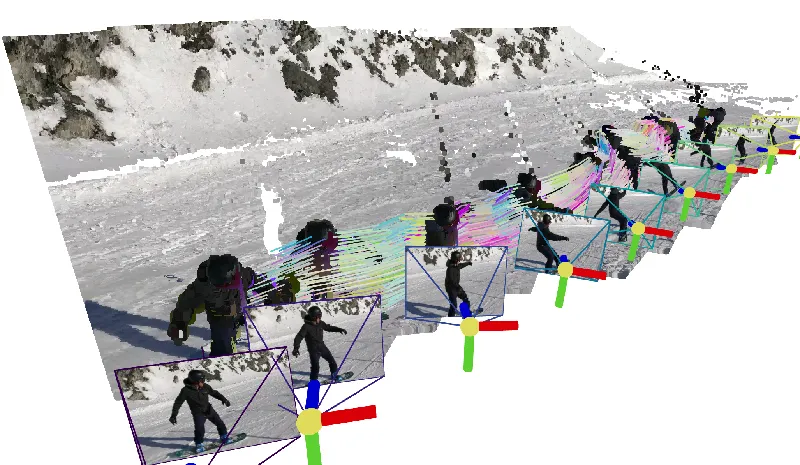

SpatialTrackerV2 integrates depth estimation, camera pose estimation, and object motion analysis into a single feed-forward model. Previous models typically required ground-truth 3D tracking data for training. Because SpatialTrackerV2 is fully differentiable end to end, it can instead be trained on a wide range of datasets, including synthetic and unlabeled real-world data, which improves generalization and accuracy across scenarios.

How It Works

The model's architecture is divided into a front-end and a back-end. The front-end estimates video depth and initializes camera poses, while the back-end uses a Joint Motion Optimization Module to refine these estimates iteratively. Decomposing the tracking problem into three components (video depth, camera motion, and object motion) lets each source of error be handled separately, which improves overall performance.
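To make the decomposition concrete, here is a minimal sketch of how a 2D pixel track, per-frame depth, and per-frame camera pose combine into a 3D trajectory in world coordinates. This is plain pinhole-camera geometry, not the authors' implementation. The function names, the intrinsics, and the camera-to-world pose convention are all assumptions made for illustration.

```python
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Pose = Tuple[Sequence[Sequence[float]], Sequence[float]]  # (R, t), camera-to-world


def unproject(u: float, v: float, depth: float,
              fx: float, fy: float, cx: float, cy: float) -> Vec3:
    """Lift pixel (u, v) with depth along the optical axis to camera coordinates."""
    x = (u - cx) / fx * depth
    y = (v - cy) / fy * depth
    return (x, y, depth)


def camera_to_world(p_cam: Vec3, rotation: Sequence[Sequence[float]],
                    translation: Sequence[float]) -> Vec3:
    """Apply a camera-to-world pose: p_world = R * p_cam + t."""
    return tuple(
        sum(rotation[i][j] * p_cam[j] for j in range(3)) + translation[i]
        for i in range(3)
    )


def lift_track(track_2d: Sequence[Tuple[float, float]],
               depths: Sequence[float],
               intrinsics: Tuple[float, float, float, float],
               poses: Sequence[Pose]) -> List[Vec3]:
    """Combine a 2D track, per-frame depth, and per-frame pose into a world-space track."""
    fx, fy, cx, cy = intrinsics
    return [
        camera_to_world(unproject(u, v, d, fx, fy, cx, cy), rotation, translation)
        for (u, v), d, (rotation, translation) in zip(track_2d, depths, poses)
    ]


# Hypothetical two-frame example: a static point observed by a camera
# that slides 0.5 units along the x axis between frames.
IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
intrinsics = (100.0, 100.0, 50.0, 50.0)            # fx, fy, cx, cy (assumed values)
track_2d = [(50.0, 50.0), (25.0, 50.0)]            # the pixel moves in the image...
depths = [2.0, 2.0]
poses = [(IDENTITY, [0.0, 0.0, 0.0]), (IDENTITY, [0.5, 0.0, 0.0])]

world_track = lift_track(track_2d, depths, intrinsics, poses)
print(world_track)  # ...but the world-space point does not move
```

In this example the pixel shifts by 25 pixels between frames, yet both lifted points land at (0, 0, 2) in world space: the apparent 2D motion is explained entirely by camera motion, so the object motion is zero. Any residual world-space displacement after this step would be attributed to the object itself.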

Unmatched Performance Metrics

In evaluations on the TAPVid-3D benchmark, SpatialTrackerV2 is reported to outperform existing models on core metrics such as Average Jaccard and Average 3D Position Accuracy. It also estimates consistent video depth and camera poses, which improves dynamic reconstruction accuracy and helps maintain 3D trajectories under challenging conditions such as occlusions and fast motion.
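For readers unfamiliar with Jaccard-style tracking metrics, the following is a simplified sketch of the idea: at each distance threshold, a prediction counts as a true positive only if the point is visible in the ground truth, predicted visible, and within the threshold, and the score is then averaged over thresholds. The fixed thresholds, the function names, and the sample data are assumptions for illustration. The official benchmark defines its own thresholds and scale alignment, which this sketch does not reproduce.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float, float]


def jaccard_at(pred: Sequence[Point], gt: Sequence[Point],
               pred_vis: Sequence[bool], gt_vis: Sequence[bool],
               threshold: float) -> float:
    """Jaccard score TP / (TP + FP + FN) at a single distance threshold."""
    tp = fp = fn = 0
    for p, g, pv, gv in zip(pred, gt, pred_vis, gt_vis):
        close = math.dist(p, g) < threshold
        if gv and pv and close:
            tp += 1
        if pv and not (gv and close):
            fp += 1  # predicted visible, but occluded in GT or too far away
        if gv and not (pv and close):
            fn += 1  # visible in GT, but predicted occluded or too far away
    denom = tp + fp + fn
    # Returning 0.0 when nothing is visible is a convention chosen for this sketch.
    return tp / denom if denom else 0.0


def average_jaccard(pred: Sequence[Point], gt: Sequence[Point],
                    pred_vis: Sequence[bool], gt_vis: Sequence[bool],
                    thresholds: Sequence[float]) -> float:
    """Mean of the per-threshold Jaccard scores."""
    scores = [jaccard_at(pred, gt, pred_vis, gt_vis, t) for t in thresholds]
    return sum(scores) / len(scores)


# Hypothetical data: four point observations with visibility flags.
gt_points = [(0.0, 0.0, 1.0), (0.0, 0.0, 2.0), (0.0, 0.0, 3.0), (0.0, 0.0, 4.0)]
pred_points = [(0.0, 0.0, 1.05), (0.0, 0.0, 2.3), (0.0, 0.0, 3.0), (0.0, 0.0, 4.0)]
gt_visible = [True, True, True, False]
pred_visible = [True, True, False, True]

aj = average_jaccard(pred_points, gt_points, pred_visible, gt_visible,
                     thresholds=(0.1, 0.5))
print(f"average Jaccard over assumed thresholds: {aj:.2f}")
```

Note that a point predicted visible but placed too far from a visible ground-truth point is penalized twice, once as a false positive and once as a false negative. This is why such a metric rewards getting position and occlusion right together.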

Implications for Future Applications

The implications of SpatialTrackerV2 extend beyond academic research. It has substantial potential for integration into applications such as augmented reality, autonomous driving, and interactive gaming, and it could support further advances in robotics and intelligent systems by linking observations of the physical world to virtual representations.

By combining accuracy with speed in a single model, SpatialTrackerV2 makes 3D point tracking more efficient and more accessible, which should allow broader adoption across these technologies.