Revolutionizing Language Models: The Power of Tailored Pretraining with BETR

Recent research from a team at Apple investigates a pivotal question in language model development: what happens when pretraining data is deliberately aligned with the tasks a model will be evaluated on. Their method, benchmark-targeted ranking (BETR), offers a systematic way to optimize pretraining data selection for large language models (LLMs).

The Essence of BETR

At its core, BETR selects pretraining documents according to how closely they resemble examples from the benchmarks used to evaluate a model. Traditional data selection methods often rely on loosely defined notions of quality, shaped largely by researcher intuition. BETR instead takes a systematic approach: it embeds benchmark examples and a sample of pretraining documents in a shared space, scores each sampled document by its similarity to the benchmark examples, and then trains a lightweight classifier to predict these scores, which lets it rank the entire document pool by how well each document aligns with the benchmarks.
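A minimal sketch of the embed, score, and rank loop, under loud assumptions: a bag-of-words count vector stands in for a real embedding model (the `embed` function is hypothetical), cosine similarity is the similarity measure, and a document's score is its maximum similarity to any single benchmark example. None of these choices is confirmed as BETR's exact recipe. The toy pool is small enough to score every document directly; at corpus scale, only a sample would be scored this way and the scores extended to the rest.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    # Hypothetical stand-in for a learned embedding model: a bag-of-words count vector.
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse count vectors (0.0 if either is empty).
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def rank_documents(benchmark_examples: list[str], documents: list[str]) -> list[tuple[float, str]]:
    # Embed the (non-empty) list of benchmark examples once.
    bench_vecs = [embed(ex) for ex in benchmark_examples]
    scored = []
    for doc in documents:
        vec = embed(doc)
        # Assumed scoring rule: best similarity to any single benchmark example.
        score = max(cosine(vec, b) for b in bench_vecs)
        scored.append((score, doc))
    # Most benchmark-like documents first.
    return sorted(scored, key=lambda pair: pair[0], reverse=True)


benchmarks = ["what is the boiling point of water", "solve for x in 2x plus 3 equals 7"]
docs = ["celebrity gossip roundup", "water boils at 100 degrees", "how to solve linear equations for x"]
for score, doc in rank_documents(benchmarks, docs):
    print(f"{score:.3f}  {doc}")
```

On this toy pool the equation-solving document ranks first, the water document second, and the gossip item last with a score of zero, since it shares no words with either benchmark example.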

Impressive Results Across Multiple Scales

In their experiments, the researchers trained over 500 models spanning 10^19 to 10^22 FLOPs and fitted scaling laws to the results. The findings were noteworthy: BETR achieved a 2.1× compute multiplier over DCLM-Baseline, a strong existing filtered dataset, and a 4.7× multiplier over unfiltered data. In other words, training on the baseline data would take roughly 2.1 times as much compute to reach the same performance. The gains were not confined to a single metric: BETR improved performance on nine of ten benchmarked tasks at every scale tested, indicating that targeted pretraining pays off regardless of model size.
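A compute multiplier compares two data recipes at equal performance rather than comparing their scores directly. One common way to write it, assuming C_X(s) denotes the training FLOPs that recipe X needs to reach benchmark score s (read off a fitted scaling curve), is shown below; the 10^21-FLOP budget is an arbitrary illustrative value, not a figure from the study.

```latex
M \;=\; \frac{C_{\text{baseline}}(s)}{C_{\text{BETR}}(s)},
\qquad
M = 2.1,\; C_{\text{BETR}}(s) = 10^{21}\ \text{FLOPs}
\;\Rightarrow\;
C_{\text{baseline}}(s) = 2.1 \times 10^{21}\ \text{FLOPs}
```

Read the same way, the 4.7× figure against unfiltered data means an unfiltered run would need about 4.7 × 10^21 FLOPs to match that BETR run.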

Strategic Data Selection and Its Implications

By directly aligning pretraining data with evaluation benchmarks, BETR sheds light on how the choice of training data shapes model capabilities. The method is also flexible. Targeting a single benchmark or a narrow set of benchmarks produces models that are especially strong in those specific areas. Targeting a diverse array of benchmarks instead preserves broader, more general capabilities, showing that the balance between specialization and generalization can be controlled through data selection.
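Moving from one target to many is, at its simplest, a question of how per-benchmark scores are merged. The sketch below assumes similarity scores have already been computed for each benchmark (the benchmark names are hypothetical) and merges them by rank: each document keeps its best rank across all targets, so a benchmark whose similarities happen to run higher cannot crowd out the others. This rank-based merge is an illustrative choice, not a description of BETR's own aggregation.

```python
def combine_targets(scores_by_benchmark: dict[str, list[float]]) -> list[int]:
    # scores_by_benchmark maps a benchmark name to one similarity score per document.
    if not scores_by_benchmark:
        return []
    n_docs = len(next(iter(scores_by_benchmark.values())))
    best_rank = [n_docs] * n_docs
    for scores in scores_by_benchmark.values():
        if len(scores) != n_docs:
            raise ValueError("every benchmark must score the same documents")
        # Rank documents for this benchmark alone (rank 0 = most similar).
        order = sorted(range(n_docs), key=lambda i: scores[i], reverse=True)
        for rank, doc_idx in enumerate(order):
            # Keep the best rank a document achieves for any target.
            best_rank[doc_idx] = min(best_rank[doc_idx], rank)
    # Document indices ordered by their best rank across all targets.
    return sorted(range(n_docs), key=lambda i: best_rank[i])


scores = {
    "math_qa": [0.05, 0.9, 0.2, 0.1],
    "science_qa": [0.1, 0.0, 0.3, 0.8],
}
print(combine_targets(scores))
```

Here document 1 is the top match for the math target and document 3 for the science target, so both lead the merged ranking even though each scores poorly on the other benchmark. Passing a single benchmark recovers the narrow, specialized ranking.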

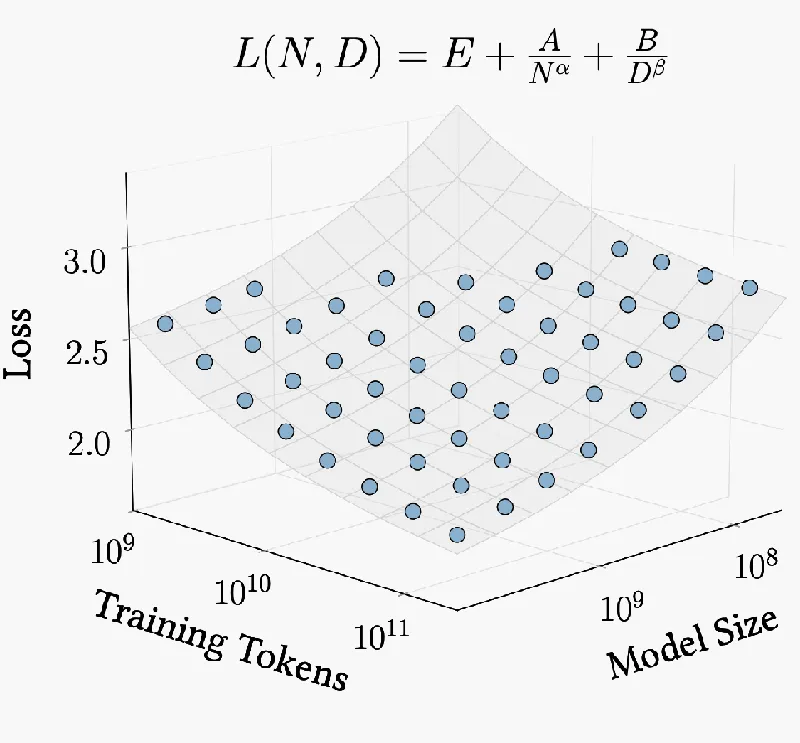

Scaling Insights and Compute Efficiency

The scaling analysis revealed a clear trend: larger models benefit from less aggressively filtered, more diverse data, while smaller models perform better with aggressively filtered data. This suggests that the degree of filtering should not be fixed but should be adjusted as the compute budget grows. In essence, BETR lays the groundwork for training pipelines whose data selection adapts to the scale of the model being trained.
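In practice, this finding turns the filtering threshold into a knob that should be set per compute budget. A minimal sketch, assuming the pool is already sorted best-first (for example by a BETR-style score); the keep fractions below are hypothetical placeholders, not values from the study, and would need to be tuned.

```python
def select_top_fraction(ranked_docs: list[str], keep_fraction: float) -> list[str]:
    """Keep the best-ranked share of a pool that is already sorted best-first."""
    if not 0.0 < keep_fraction <= 1.0:
        raise ValueError("keep_fraction must be in (0, 1]")
    # Round to the nearest whole document (guarding against tiny float error)
    # and always keep at least one document.
    k = max(1, round(len(ranked_docs) * keep_fraction))
    return ranked_docs[:k]


# Hypothetical keep fractions: aggressive filtering for a small-compute run,
# looser filtering for a large-compute run.
KEEP_FRACTION_BY_BUDGET = {"small": 0.1, "large": 0.5}

pool = [f"doc_{i}" for i in range(100)]  # assumed to be ranked best-first already
small_run_data = select_top_fraction(pool, KEEP_FRACTION_BY_BUDGET["small"])
large_run_data = select_top_fraction(pool, KEEP_FRACTION_BY_BUDGET["large"])
print(len(small_run_data), len(large_run_data))
```

The larger run trains on a broader slice of the same ranking, which mirrors the observation that bigger models benefit from more diverse data.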

Conclusion: A Future of Tailored Learning

As the field continues to advance, strategies like BETR mark a significant step toward language models whose training data is chosen deliberately for the capabilities they need. The implications extend beyond academic interest: targeted data selection can make pretraining more compute-efficient and help developers build models suited to the demands of specific application domains. By turning pretraining data selection into a targeted, measurable process, researchers can unlock new levels of performance in language models and improve their usefulness in real-world applications.