Revolutionizing Robot Object Manipulation with ViTa-Zero: The Game-Changer in 6D Pose Estimation

In the realm of robotics, handling and manipulating objects with precision is paramount, especially when it comes to tasks that require an understanding of an object's position and orientation in space. A groundbreaking approach to this challenge has been introduced in the research paper titled "fViTa-Zero: Zero-shot Visuotactile Object 6D Pose Estimation" by Hongyu Li and colleagues. This innovative framework offers a robust solution for accurately estimating the 6D pose of objects, utilizing both visual and tactile data without the need for extensive prior training on tactile datasets.

The Challenge of 6D Pose Estimation

6D pose estimation refers to the process of determining an object's position and orientation in a three-dimensional space. For robots, achieving this accuracy is vital, particularly during tasks that involve delicate manipulation, like grabbing or passing objects. Traditional visual methods often falter in real-world scenarios characterized by occlusions and dynamic interactions, which pose substantial challenges. The fusion of visual and tactile information, known as visuotactile sensing, has shown promise but is often limited by the availability of tactile datasets.

Introducing ViTa-Zero

ViTa-Zero is a revolutionary framework that allows for zero-shot visuotactile pose estimation. This means it can make accurate estimations even without prior exposure to specific tactile data. The uniqueness of ViTa-Zero lies in its use of a visual model as its backbone while performing feasibility checks and optimizations based on tactile and proprioceptive feedback.

How Does It Work?

The core of ViTa-Zero's functionality revolves around modeling the interaction between the robot's gripper and the object as a spring-mass system. This model incorporates two types of forces: attractive forces that draw the object closer to tactile sensors and repulsive forces that ensure the object does not penetrate the robot's structure. During test time, if a visual estimate fails due to poor visibility or occlusion, the framework refines it by leveraging tactile and proprioceptive inputs to ensure a stable and accurate tracking of the object.

Real-World Impact and Performance

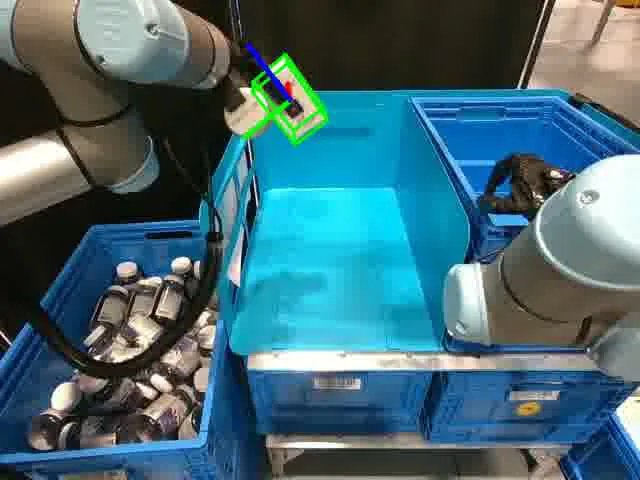

To validate its effectiveness, the researchers equipped a real-world robot setup with ViTa-Zero and conducted various tests. The results were impressive: the approach demonstrated an average increase of 55% in the Area Under Curve (AUC) of the Add-Substituted (ADD-S) metric and 60% in the ADD metric, alongside an 80% reduction in position error when compared to existing models like FoundationPose. These metrics underline ViTa-Zero's potential to significantly enhance object manipulation capabilities in robotic systems.

Conclusion and Future Prospects

ViTa-Zero stands as a remarkable advancement in the field of robotic manipulation, bridging the gap between visual estimation and tactile feedback. By eliminating the dependency on extensive tactile datasets and improving generalization across different manipulation scenarios, this framework opens new avenues for robots to interact more effectively with their environments. Future work could focus on integrating this technology into wider robotic applications, paving the way for more adaptive and intelligent systems.