Transforming Robot Learning: How Latent Policy Steering is Redefining Visuomotor Policies with Minimal Data

One of the most pressing challenges in robotics is teaching robots to perform tasks from minimal real-world data. A recent research paper by Yiqi Wang and colleagues from Carnegie Mellon University addresses this challenge by introducing "Latent Policy Steering" (LPS), a method that improves how well robot policies perform when only a small number of demonstrations is available.

The Quest for Efficient Learning

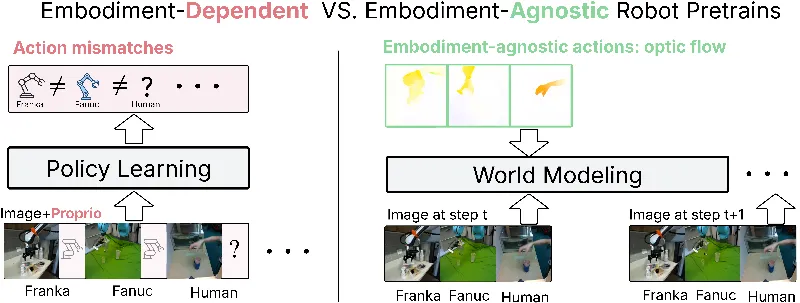

The traditional approach to training robotic systems often relies heavily on collecting vast amounts of data through demonstrations, which can be costly and time-consuming. This research seeks to reduce that burden by leveraging existing and cost-effective datasets, including those obtained from human interactions and various robotic embodiments.

By using a technique known as "optical flow," which captures visual motion in a way that is independent of the specific robot embodiment, the authors developed a World Model (WM) that can be pre-trained across diverse datasets. This allows the robot to learn from a broader pool of experience, so the model can be adapted to new tasks with significantly less training data.
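To make the idea of embodiment-independent visual motion concrete, here is a minimal sketch of the simplest special case of optical flow: a brute-force search for one global integer translation between two frames. It is an illustration only, not the flow estimator used in the paper; the function `estimate_shift`, the helper `pattern`, and the toy frames are all hypothetical names and data introduced for this example.

```python
from typing import List, Tuple

Frame = List[List[float]]


def estimate_shift(prev: Frame, curr: Frame, max_shift: int = 2) -> Tuple[int, int]:
    """Return the integer (dx, dy) for which curr[y + dy][x + dx] best matches prev[y][x]."""
    height, width = len(prev), len(prev[0])
    best, best_err = (0, 0), float("inf")
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            err, count = 0.0, 0
            for y in range(height):
                for x in range(width):
                    ny, nx = y + dy, x + dx
                    # Only compare pixels that stay inside the frame after shifting.
                    if 0 <= ny < height and 0 <= nx < width:
                        err += (curr[ny][nx] - prev[y][x]) ** 2
                        count += 1
            # Compare mean squared error so smaller overlaps are not favored.
            if count and err / count < best_err:
                best, best_err = (dx, dy), err / count
    return best


def pattern(y: int, x: int) -> float:
    """Hypothetical smooth intensity pattern used to build the toy frames."""
    return float(x * x + 10 * y * y)


# Two 6x6 windows onto the same pattern; the content moves 2 pixels right, 1 pixel down.
prev_frame = [[pattern(y, x) for x in range(6)] for y in range(6)]
curr_frame = [[pattern(y - 1, x - 2) for x in range(6)] for y in range(6)]

shift = estimate_shift(prev_frame, curr_frame)
print("estimated (dx, dy):", shift)
```

Note that the estimate depends only on pixel motion, not on what produced it. A human hand and a robot gripper moving the same object the same way would yield the same shift, which is the property that makes flow usable across embodiments.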

Latent Policy Steering: A New Direction

The key innovation presented in this research is the Latent Policy Steering (LPS) method. LPS improves the performance of behavior-cloned policies by searching the latent space of the World Model for better action sequences. This lets robots complete tasks with little data: the authors report over a 50% relative improvement when using just 30 demonstrations.
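The search described above can be sketched as a sample-and-rank loop: draw several candidate action sequences, imagine each one inside the world model, and keep the one whose imagined outcome scores best. This is a minimal sketch under stated assumptions, not the authors' implementation. The additive `toy_dynamics`, the distance-based `toy_value`, the fixed `GOAL`, and the random `sample_plan` are hypothetical stand-ins for a learned world model, a learned value function, and a behavior-cloned policy.

```python
import random
from typing import Callable, List

Latent = List[float]
Action = List[float]
Plan = List[Action]


def rollout(dynamics: Callable[[Latent, Action], Latent], z0: Latent, plan: Plan) -> Latent:
    """Imagine the plan inside the world model and return the final latent state."""
    z = z0
    for action in plan:
        z = dynamics(z, action)
    return z


def steer(candidates: List[Plan],
          dynamics: Callable[[Latent, Action], Latent],
          value: Callable[[Latent], float],
          z0: Latent) -> Plan:
    """Return the candidate plan whose imagined final latent state scores highest."""
    return max(candidates, key=lambda plan: value(rollout(dynamics, z0, plan)))


# Hypothetical stand-in for a learned latent dynamics model: the action is added to the state.
def toy_dynamics(z: Latent, a: Action) -> Latent:
    return [zi + ai for zi, ai in zip(z, a)]


GOAL = [1.0, 1.0]  # hypothetical goal latent


# Hypothetical stand-in for a learned value function: closer to GOAL is better.
def toy_value(z: Latent) -> float:
    return -sum((zi - gi) ** 2 for zi, gi in zip(z, GOAL))


rng = random.Random(0)


# Hypothetical stand-in for sampling an action sequence from a behavior-cloned policy.
def sample_plan(horizon: int = 4) -> Plan:
    return [[rng.uniform(-0.5, 0.5), rng.uniform(-0.5, 0.5)] for _ in range(horizon)]


start = [0.0, 0.0]
candidates = [sample_plan() for _ in range(32)]
best = steer(candidates, toy_dynamics, toy_value, start)
best_score = toy_value(rollout(toy_dynamics, start, best))
print("best imagined score:", best_score)
```

Because the candidates come from the policy itself, this kind of steering only re-ranks behavior the policy already considers plausible. The world model's job is to predict which of those options ends up in the best place.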

By treating every successful demonstration as a "goal state," LPS guides the policy toward outcomes that resemble those demonstrations, which raises the likelihood of success even when the robot has access to only a few examples.
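One simple way to picture goal-state guidance is to score a latent state by how close it lies to the nearest goal taken from a demonstration. The sketch below uses a plain nearest-neighbor Euclidean distance. That choice is an assumption made for illustration and is not the paper's value function; `goal_score` and the example latents are hypothetical.

```python
import math
from typing import Sequence


def goal_score(z: Sequence[float], goal_latents: Sequence[Sequence[float]]) -> float:
    """Higher is better: negative Euclidean distance from z to the nearest goal latent."""
    if not goal_latents:
        raise ValueError("need at least one goal latent")
    return -min(math.dist(z, g) for g in goal_latents)


# Hypothetical goal latents, one per successful demonstration.
demo_goals = [[3.0, 4.0], [1.0, 0.0]]

print("score at origin:", goal_score([0.0, 0.0], demo_goals))
print("score at a goal:", goal_score([3.0, 4.0], demo_goals))
```

Each additional successful demonstration adds another goal to the set, so the score can only stay the same or improve for any given state as more demonstrations arrive.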

Significant Findings and Real-World Applications

The results from Wang and colleagues indicate that their approach improves task success rates in both simulated environments and real-world settings. For instance, policies trained on minimal data and paired with the World Model outperformed baselines in tasks such as lifting objects and transporting items with precision.

This research suggests that robots can be trained effectively without extensive task-specific datasets, which opens avenues for faster and more efficient deployment in practical applications ranging from manufacturing to complex home tasks.

Conclusion: A Step Forward in Robotic Learning

The work of Wang et al. offers not just a method but a shift toward a more data-efficient approach to robot learning. As robots become more deeply integrated into everyday life, methods like Latent Policy Steering could play an important role in enabling them to learn and adapt from limited data.