Unveiling ODHSR: Transforming Monocular Videos into Rich 3D Human-Scene Reconstructions

Researchers in computer vision have unveiled a novel framework called Online Dense 3D Reconstruction of Humans and Scenes from Monocular Videos (ODHSR). It reconstructs dynamic environments and the people moving through them from a single camera feed, processing the video online rather than in a lengthy offline pass.

The Challenge of Traditional Reconstruction

Traditional methods for 3D reconstruction often rely on complex setups that require multiple cameras or pre-calibrated systems. This poses challenges in real-world applications, where setups must be flexible and efficient. Most existing approaches take significant time to compute and are not designed for online usage, rendering them impractical for real-time interactions.

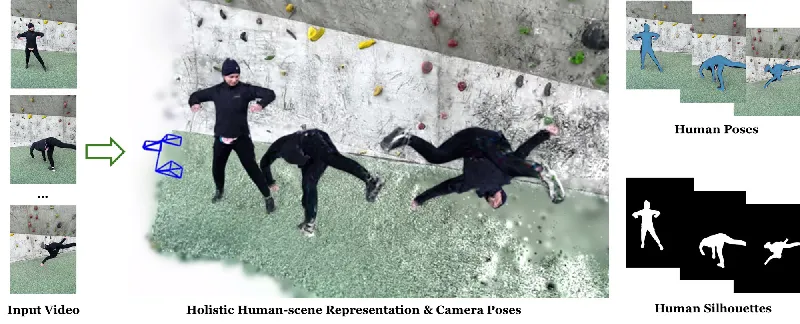

ODHSR steps in to address these limitations. Using only a monocular RGB video, the framework simultaneously tracks the camera, estimates human poses, and reconstructs both the scene and the human figure in detail, replacing what would otherwise be several separate stages with a single pipeline.

A Unified Framework for Real-Time Reconstruction

The core of ODHSR's efficiency lies in its unified design, which follows a simultaneous localization and mapping (SLAM) approach. It integrates camera tracking, human pose estimation, and a dense, photorealistic scene representation based on 3D Gaussian Splatting, which models the scene as a set of 3D Gaussians that are projected onto the image and blended to form each pixel.
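To make the rendering step more concrete, here is a minimal sketch of the front-to-back alpha compositing that Gaussian Splatting renderers generally use to turn depth-sorted Gaussians into a pixel color. It is an illustration under simplifying assumptions, not ODHSR's implementation: the function name `composite_ray` and the `(depth, alpha, rgb)` sample layout are hypothetical, and each `alpha` is assumed to already include the Gaussian's 2D footprint at that pixel.

```python
def composite_ray(samples):
    """Blend the Gaussians covering one pixel, nearest first.

    samples: list of (depth, alpha, (r, g, b)) tuples (hypothetical layout).
    Returns the blended color and the remaining transmittance.
    """
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light not yet absorbed by nearer Gaussians

    # Sort by depth so nearer Gaussians are blended before farther ones.
    for _depth, alpha, rgb in sorted(samples, key=lambda s: s[0]):
        weight = alpha * transmittance
        for ch in range(3):
            color[ch] += weight * rgb[ch]
        transmittance *= 1.0 - alpha
        if transmittance < 1e-4:  # pixel is effectively opaque, stop early
            break

    return tuple(color), transmittance


# A half-transparent red Gaussian in front of a half-transparent blue one.
pixel, remaining = composite_ray([
    (2.0, 0.5, (0.0, 0.0, 1.0)),  # blue, farther away
    (1.0, 0.5, (1.0, 0.0, 0.0)),  # red, nearer
])
print(pixel, remaining)
```

Because the nearer Gaussian absorbs part of the light first, the red one contributes more to the final pixel than the blue one behind it, even though both have the same opacity.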

With ODHSR, users can obtain detailed reconstructions that capture intricate human movements and environmental details, all without the extensive training times traditionally required for such results. Notably, the framework is reported to be up to 75 times faster than previous methods.

Key Innovations within ODHSR

One of the standout features of ODHSR is its human deformation model, which faithfully reconstructs the details of clothing and posture regardless of changes in environment or initial conditions. This is particularly important for applications that require accurate human representation, such as virtual reality and interactive gaming.

The researchers also employ an occlusion-aware rendering technique to further improve reconstruction quality, so that human silhouettes are rendered correctly even in complex scenes where other elements overlap the person. Combined with the framework's camera tracking, this helps maintain high fidelity while the video is processed online.
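One way to picture occlusion-aware silhouette rendering is sketched below. The article does not describe ODHSR's exact formulation, so this is only an assumed, simplified version of the general idea: human and scene Gaussians are composited together along a pixel's ray, and only the human contributions count toward the silhouette, attenuated by whatever lies in front of them. The function name `human_silhouette` and the `(depth, alpha, is_human)` layout are hypothetical.

```python
def human_silhouette(samples):
    """Visible human coverage at one pixel, accounting for occluders.

    samples: list of (depth, alpha, is_human) tuples (hypothetical layout).
    Returns a value in [0, 1]; 0 means the human is absent or fully hidden.
    """
    visible = 0.0
    transmittance = 1.0  # how much of the ray still reaches the camera

    for _depth, alpha, is_human in sorted(samples, key=lambda s: s[0]):
        if is_human:
            # Only the part not blocked by nearer Gaussians is visible.
            visible += alpha * transmittance
        # Every Gaussian, human or scene, blocks what lies behind it.
        transmittance *= 1.0 - alpha

    return visible


# An opaque human Gaussian seen directly, then behind a scene occluder.
print(human_silhouette([(2.0, 1.0, True)]))
print(human_silhouette([(2.0, 1.0, True), (1.0, 0.75, False)]))
```

In the second call, a scene element with opacity 0.75 sits in front of the person, so the silhouette value at that pixel drops accordingly. A silhouette rendered this way can be compared against a detected human mask without penalizing the model for parts of the body that the scene legitimately hides.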

Experimental Validation

ODHSR has been rigorously tested against existing state-of-the-art methods across various challenging datasets, including both indoor and outdoor environments. Results showed that ODHSR not only matched the precision of its counterparts but often surpassed them in terms of both efficiency and the quality of rendered images. The model demonstrated superior performance in novel view synthesis and human pose estimation, further establishing its robustness.
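The article does not say which metrics were used, but rendered-image quality in novel view synthesis is commonly scored with peak signal-to-noise ratio (PSNR), where higher is better. The sketch below assumes that metric purely for illustration; the function name `psnr` and the flat-list image representation with values in [0, 1] are hypothetical choices.

```python
import math


def psnr(rendered, reference, max_value=1.0):
    """Peak signal-to-noise ratio in decibels between two images.

    rendered, reference: equal-length flat lists of pixel intensities
    (hypothetical representation), with values in [0, max_value].
    """
    if len(rendered) != len(reference) or not rendered:
        raise ValueError("images must be non-empty and the same size")

    # Mean squared error over all pixel values.
    mse = sum((a - b) ** 2 for a, b in zip(rendered, reference)) / len(rendered)
    if mse == 0.0:
        return math.inf  # identical images

    return 10.0 * math.log10(max_value ** 2 / mse)


# A rendered view that is off by 0.1 at every pixel.
print(psnr([0.5, 0.5], [0.4, 0.6]))
```

A comparison between methods would average such a score over held-out camera views that were not used during reconstruction.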

Future Implications

The introduction of ODHSR opens new avenues for research and development in robotics, augmented reality, and human-centric computing. By simplifying the process of capturing detailed 3D environments and human figures, it paves the way for future innovations in how we interact with digital spaces.

In conclusion, the ODHSR framework promises to expand what is possible in 3D reconstruction from monocular videos, and it signals a new chapter in the integration of AI within our everyday visual experiences.