VisionThink: A Game Changer in Efficient Vision-Language Models with Smart Dynamic Processing

The recent paper "VisionThink: Smart and Efficient Vision Language Model via Reinforcement Learning" introduces a new approach to how vision-language models (VLMs) process visual tokens. Conventional VLMs typically encode every image into a fixed, often large, number of visual tokens, and many of those tokens are redundant for the question at hand. VisionThink instead adjusts its input resolution sample by sample. It maintains strong performance without spending tokens on superfluous visual detail.

What is VisionThink?

VisionThink is a VLM trained with reinforcement learning to judge when a high-resolution image is actually needed. It does not process every image at full resolution. Instead, it first looks at a downsampled version and, if that is not enough to answer the question, requests the higher-resolution original. This keeps it cheap on simple questions while preserving accuracy on tasks that demand fine-grained visual recognition, such as reading text in images (OCR-related applications).
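The decision flow described above can be sketched in Python. This is a minimal illustration under stated assumptions and does not reproduce the paper's implementation. The special token `<request_high_res>`, the `Image` placeholder, `downsample`, and `toy_model` are all hypothetical. The sketch also simplifies one step: it re-asks the question on the full image, whereas the real model continues a multi-turn conversation with the high-resolution image appended.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

# Hypothetical special token a model would emit to ask for the full-resolution image.
REQUEST_HIGH_RES = "<request_high_res>"


@dataclass
class Image:
    """Placeholder for an image; only its size matters in this sketch."""
    width: int
    height: int


def downsample(img: Image, factor: int = 2) -> Image:
    """Shrink each side by `factor`; factor=2 keeps about 1/4 of the pixels."""
    return Image(max(1, img.width // factor), max(1, img.height // factor))


def answer_adaptively(
    model: Callable[[Image, str], str], img: Image, question: str
) -> Tuple[str, bool]:
    """Run the cheap low-resolution pass first and fall back to the original
    image only if the model asks for it. Returns (answer, used_high_res)."""
    reply = model(downsample(img), question)
    if REQUEST_HIGH_RES in reply:
        # Simplification: re-ask the question on the full image instead of
        # continuing a multi-turn conversation.
        return model(img, question), True
    return reply, False


def toy_model(img: Image, question: str) -> str:
    """Hypothetical stand-in for a VLM: it claims it needs detail to read text."""
    if "read" in question.lower() and img.width < 1000:
        return REQUEST_HIGH_RES
    return f"answer@{img.width}x{img.height}"


photo = Image(1280, 960)
print(answer_adaptively(toy_model, photo, "What color is the car?"))
print(answer_adaptively(toy_model, photo, "Read the street sign."))
```

With a 1280x960 input, the question about color is answered from the 640x480 copy. The question that requires reading text triggers a second pass at full resolution.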

Key Innovations and Advantages

One of the study's most striking findings is that reducing visual resolution by as much as 75% has minimal impact on performance in most general visual question answering (VQA) tasks. Only a smaller set of detail-heavy tasks, such as OCR, suffers noticeably. This challenges the assumption that more visual input always yields better results. VisionThink compresses visual tokens adaptively rather than uniformly. As a result, it keeps high performance on detail-oriented tasks while cutting processing costs on the rest.
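If the 75% reduction is read as a cut in pixel area, it translates directly into visual tokens. Patch-based vision encoders emit roughly one token per fixed-size tile, so halving both width and height leaves about a quarter of the tokens. The sketch below illustrates that arithmetic. It assumes one token per 28 x 28 pixel tile. The `pixels_per_token` value and the image sizes are illustrative assumptions, and actual encoders differ.

```python
import math


def visual_tokens(width: int, height: int, pixels_per_token: int = 28) -> int:
    """Approximate token count for a patch-based vision encoder, assuming one
    token per pixels_per_token x pixels_per_token tile (partial tiles rounded up).
    The default of 28 is an illustrative assumption, not a VisionThink setting."""
    return math.ceil(width / pixels_per_token) * math.ceil(height / pixels_per_token)


full = visual_tokens(1344, 1008)  # original image
reduced = visual_tokens(672, 504)  # width and height halved, so 1/4 of the area
print(f"{full} -> {reduced} tokens, {1 - reduced / full:.0%} fewer")
```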

How Does VisionThink Work?

VisionThink is trained with reinforcement learning and uses an "LLM-as-Judge" strategy to score its answers. A separate language model checks whether each answer matches the ground truth, and that verdict becomes the reward signal. This makes the reward usable for open-ended questions, where exact string matching would fail. The reward function is also designed so that the model requests high-resolution images only when they are actually needed, balancing efficiency against accuracy.
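A reward of the kind described above might combine three parts: an accuracy term from the judge, a format term, and a cost for requesting the high-resolution image. The sketch below is hypothetical and is not the paper's formula. `judge_stub` replaces the LLM judge with a normalized string comparison. The weights (0.5 for format, 0.1 for the high-resolution penalty) are illustrative values.

```python
def judge_stub(prediction: str, ground_truth: str) -> bool:
    """Hypothetical placeholder for the LLM judge. A real judge would prompt a
    separate LLM to decide whether the two answers mean the same thing; this
    stub only compares case- and whitespace-normalized strings."""
    def norm(s: str) -> str:
        return " ".join(s.lower().split())
    return norm(prediction) == norm(ground_truth)


def reward(
    prediction: str,
    ground_truth: str,
    requested_high_res: bool,
    well_formatted: bool,
    format_weight: float = 0.5,     # illustrative weight, not from the paper
    high_res_penalty: float = 0.1,  # illustrative cost of asking for more pixels
) -> float:
    """Illustrative reward: judged accuracy + format bonus - high-res cost."""
    accuracy = 1.0 if judge_stub(prediction, ground_truth) else 0.0
    fmt = format_weight if well_formatted else 0.0
    cost = high_res_penalty if requested_high_res else 0.0
    return accuracy + fmt - cost


print(reward("Paris", " paris ", requested_high_res=False, well_formatted=True))
print(reward("Paris", "paris", requested_high_res=True, well_formatted=True))
print(reward("London", "paris", requested_high_res=False, well_formatted=True))
```

A flat penalty like this one can push a policy toward never requesting high resolution, even when the extra detail would help. In practice, the penalty has to be balanced against the accuracy gain, and this sketch does not attempt that balance.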

Significant Results and Findings

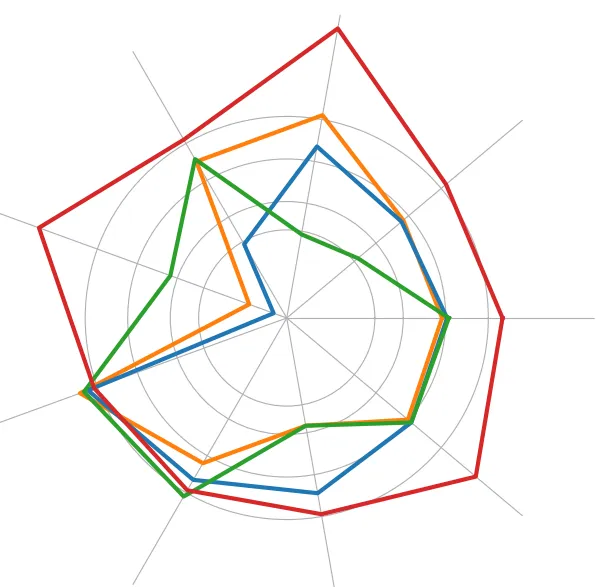

Across a range of benchmarks, VisionThink outperformed existing state-of-the-art models. Notably, it reached a score of 2400 on the MME benchmark and improved by more than 8% on OCR-related tasks, which reflects stronger fine-grained visual recognition. The study highlights a key contrast. Traditional VLMs tend to lose accuracy when their visual tokens are compressed. VisionThink avoids much of that loss because it decides for itself when high resolution is needed.

The Future of Vision-Language Models

The research behind VisionThink advances VLM technology and also points to a broader lesson for model design. Context-aware models that decide how much input they need can improve efficiency substantially without sacrificing the detail and accuracy that complex visual tasks demand. Potential real-world applications range from automated customer service to advanced robotics, where systems must understand and act on visual information efficiently.

VisionThink represents a significant step toward more efficient, adaptive AI systems capable of handling increasingly complex problems in visual comprehension.